Tech Showcase: HDC Explainable Sensor Fusion on Low SWaP Systems

At Transparent AI, we're committed to developing explainable AI solutions that are not only powerful but also efficient enough to run on edge devices. Today, we're excited to share a sensor fusion demo built on Hyperdimensional Computing (HDC) that shows strong resilience to sensor faults and clear explainability while drawing under 4 watts.

Understanding Hyperdimensional Computing

Hyperdimensional Computing (HDC) is an emerging computational paradigm inspired by the high-dimensional, distributed way the brain is thought to represent information. Unlike traditional neural networks, HDC operates on very wide vectors (typically thousands of dimensions) called "hypervectors" that encode complex information in a distributed, noise-tolerant manner.

Key properties that make HDC particularly interesting include:

Pattern-based representation: Information is encoded across the entire hypervector rather than in specific components

Compositionality: Multiple pieces of information can be combined in a single hypervector

Robustness to noise: High-dimensional spaces provide inherent noise tolerance

Explainability: Each operation has clear mathematical properties allowing traceability

Computational efficiency: Many operations can be performed with simple vector arithmetic
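To make these properties concrete, here is a minimal sketch of the basic HDC operations in plain Python. It assumes bipolar hypervectors (entries of -1 or +1), binding by element-wise multiplication, and bundling by majority vote. These are common HDC conventions, not necessarily the ones used in the demo described below, and all function names are illustrative. It uses only the standard library to stay dependency-free; an array library would be the natural choice on real hardware.

```python
import random

DIM = 10_000  # dimensionality; matches the 10,000-dimensional vectors used in the demo


def random_hv(rng):
    """Return a random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]


def bind(a, b):
    """Bind two hypervectors by element-wise multiplication (self-inverse for bipolar vectors)."""
    return [x * y for x, y in zip(a, b)]


def bundle(*hvs):
    """Bundle hypervectors by element-wise majority vote (sign of the sum); ties become +1."""
    return [1 if sum(col) >= 0 else -1 for col in zip(*hvs)]


def similarity(a, b):
    """Normalized dot product in [-1, 1]; near 0 for unrelated hypervectors."""
    return sum(x * y for x, y in zip(a, b)) / DIM


def flip_noise(hv, fraction, rng):
    """Return a copy of hv with the given fraction of components sign-flipped."""
    flipped = set(rng.sample(range(DIM), int(fraction * DIM)))
    return [-x if i in flipped else x for i, x in enumerate(hv)]


rng = random.Random(0)
a, b, c = random_hv(rng), random_hv(rng), random_hv(rng)

sim_unrelated = similarity(a, b)                      # close to 0: random vectors are quasi-orthogonal
sim_bundle = similarity(bundle(a, b, c), a)           # about 0.5: a is still visible inside the bundle
sim_noisy = similarity(flip_noise(a, 0.2, rng), a)    # 0.6: 20% flipped components, still clearly a
recovered = bind(bind(a, b), b)                       # binding with b twice gives back a
```

The noise case shows why high dimensionality helps: even with a fifth of the components flipped, the noisy vector is far closer to its original than to any unrelated hypervector.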

The Sensor Fusion Challenge

Sensor fusion combines data from multiple sensors to produce more accurate, complete, and reliable information than any single sensor could provide. This is particularly crucial for autonomous systems that must make critical decisions based on imperfect sensor readings.

Traditional approaches to sensor fusion often face challenges:

Computational intensity: Traditional methods often require significant processing power

Difficulty handling sensor failures: Many systems struggle when sensors malfunction

Lack of transparency: It's difficult to understand why the fusion stage outputs a particular value

Successfully implementing sensor fusion provides numerous benefits: improved accuracy, enhanced reliability through redundancy, extended spatial and temporal coverage, and reduced uncertainty. For critical systems like autonomous vehicles or medical devices, these benefits can be life-saving.

Our HDC Sensor Fusion Implementation

To demonstrate HDC's unique capabilities, we created a sensor fusion system utilizing:

Raspberry Pi 5 (16GB RAM) as our computing platform

BME680 Environmental Sensor providing temperature, humidity, and pressure readings

Custom HDC implementation with 10,000-dimensional vectors

Our implementation encodes each sensor reading into a hypervector, combines them into a fused representation, and then demonstrates the ability to extract individual components and detect anomalies – all while running on minimal power.
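The encode, fuse, and extract steps can be sketched as follows. This is one plausible scheme, not the demo's actual code: it assumes each reading is quantized to one of `LEVELS` level hypervectors, bound to a per-sensor "role" hypervector, and bundled by majority vote. The value ranges, the number of levels, and every name in the block are assumptions chosen for illustration.

```python
import random

DIM = 10_000     # hypervector dimensionality, as in the demo
LEVELS = 64      # assumed quantization resolution per sensor (not from the post)

# Assumed value ranges for illustration: (min, max) per sensor.
RANGES = {
    "temperature": (-40.0, 85.0),   # degrees Celsius
    "humidity": (0.0, 100.0),       # percent relative humidity
    "pressure": (300.0, 1100.0),    # hPa
}

rng = random.Random(42)


def random_hv():
    """Random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]


ROLE = {name: random_hv() for name in RANGES}   # one role hypervector per sensor
LEVEL = [random_hv() for _ in range(LEVELS)]    # level codebook shared by all sensors


def to_level(name, value):
    """Map a reading to the index of its nearest quantization level."""
    lo, hi = RANGES[name]
    clipped = min(max(value, lo), hi)
    return round((clipped - lo) / (hi - lo) * (LEVELS - 1))


def from_level(name, index):
    """Map a level index back to a value in the sensor's range."""
    lo, hi = RANGES[name]
    return lo + index / (LEVELS - 1) * (hi - lo)


def fuse(readings):
    """Bind each reading's level vector to its sensor role, then bundle by majority vote."""
    bound = []
    for name, value in readings.items():
        level = LEVEL[to_level(name, value)]
        bound.append([r * x for r, x in zip(ROLE[name], level)])
    return [1 if sum(col) >= 0 else -1 for col in zip(*bound)]


def extract(fused, name):
    """Unbind with the sensor's role vector and return the value of the most similar level."""
    unbound = [f * r for f, r in zip(fused, ROLE[name])]
    scores = [sum(u * x for u, x in zip(unbound, level)) for level in LEVEL]
    return from_level(name, scores.index(max(scores)))


readings = {"temperature": 22.4, "humidity": 41.0, "pressure": 1013.2}
fused = fuse(readings)
extracted = {name: extract(fused, name) for name in readings}
```

In this sketch the extracted values match the readings only up to the quantization step (about 2 °C for temperature with 64 levels over the assumed range), so a finer codebook or a narrower range would be needed for tighter agreement.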

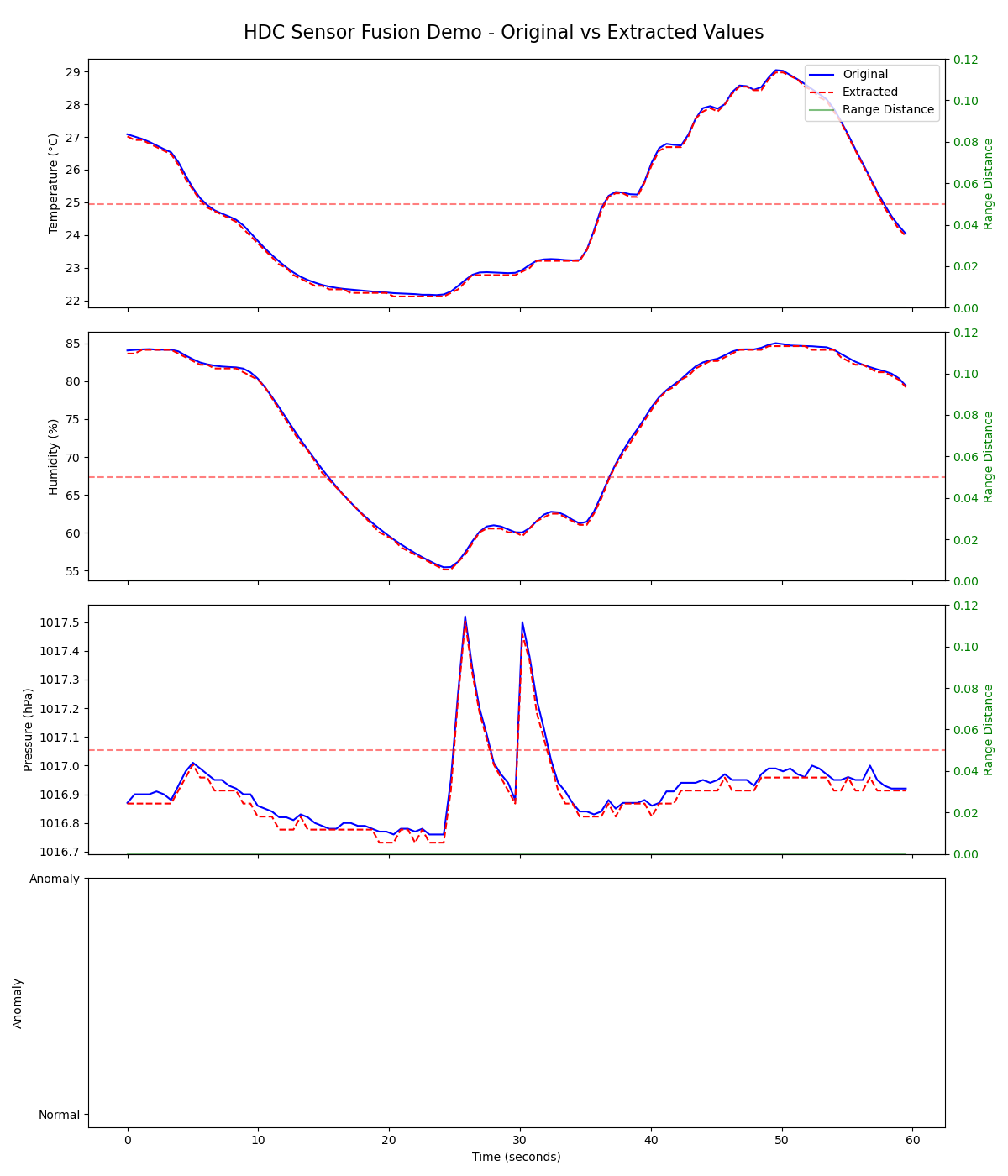

Demonstration Results: Perfect Extraction from Fused Representation

One of the most remarkable capabilities demonstrated was the ability to perfectly extract individual sensor readings from the fused representation. As shown in our testing, the "extracted" lines precisely followed the "observed" lines for each sensor under normal operating conditions.

This perfect extraction is significant for several reasons:

It shows that, under normal operating conditions, the fusion process discards nothing needed to recover each sensor's reading

It allows systems to maintain access to raw sensor data when needed

It enables detailed analysis of individual components despite using a unified representation

Robust Anomaly Detection

To test the system's ability to handle sensor failures, we intentionally corrupted the temperature readings during operation. The results were impressive:

The system immediately detected that temperature readings had moved outside the normal operating range

An anomaly flag was raised with a clear explanation of which sensor was causing the issue

The extraction process continued to deliver the best possible estimate of temperature despite corruption

This explicit anomaly detection provides critical transparency that is often lacking in neural network approaches – the system not only detects that something is wrong but can pinpoint exactly which component is causing the issue.

In the anomaly plot, an anomaly is flagged when the green Range Distance line crosses the dashed red threshold line.
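The post does not define how Range Distance is computed, so the following is only one possible reading of it. The sketch assumes correlated level hypervectors (adjacent levels differ in a fixed number of components), defines the distance as 1 minus the best similarity to any level inside a sensor's normal range, and flags a sensor by name when that distance exceeds a threshold. The ranges, `THRESHOLD`, and all names are hypothetical.

```python
import random

DIM = 10_000
LEVELS = 51                          # assumed; gives a flip step of exactly 100 components
STEP = DIM // (2 * (LEVELS - 1))     # components flipped between adjacent levels
THRESHOLD = 0.05                     # assumed position of the dashed red line

# Assumed full-scale and normal operating ranges, for illustration only.
FULL = {"temperature": (-40.0, 85.0), "humidity": (0.0, 100.0), "pressure": (300.0, 1100.0)}
NORMAL = {"temperature": (15.0, 30.0), "humidity": (20.0, 60.0), "pressure": (980.0, 1040.0)}

rng = random.Random(7)
base = [rng.choice((-1, 1)) for _ in range(DIM)]
order = rng.sample(range(DIM), DIM)  # random order in which components get flipped


def level_hv(index):
    """Level `index` flips the first index*STEP components of `order`, so the
    similarity between two levels falls linearly with their index difference."""
    flipped = set(order[: index * STEP])
    return [-x if i in flipped else x for i, x in enumerate(base)]


LEVEL = [level_hv(k) for k in range(LEVELS)]


def to_level(name, value):
    """Map a reading to the index of its nearest level within the full-scale range."""
    lo, hi = FULL[name]
    clipped = min(max(value, lo), hi)
    return round((clipped - lo) / (hi - lo) * (LEVELS - 1))


def similarity(a, b):
    """Normalized dot product in [-1, 1]."""
    return sum(x * y for x, y in zip(a, b)) / DIM


def range_distance(name, value):
    """1 minus the best similarity between the reading's hypervector and any in-range level."""
    hv = LEVEL[to_level(name, value)]
    lo_idx, hi_idx = (to_level(name, v) for v in NORMAL[name])
    return 1.0 - max(similarity(hv, LEVEL[k]) for k in range(lo_idx, hi_idx + 1))


def check(readings):
    """Return {sensor: (range_distance, is_anomalous)} so the offending sensor is named."""
    report = {}
    for name, value in readings.items():
        d = range_distance(name, value)
        report[name] = (d, d > THRESHOLD)
    return report


normal = check({"temperature": 22.0, "humidity": 40.0, "pressure": 1010.0})
corrupted = check({"temperature": 70.0, "humidity": 40.0, "pressure": 1010.0})
flagged = [name for name, (_, bad) in corrupted.items() if bad]   # ["temperature"]
```

Because each sensor is scored separately, the report says which sensor left its normal range and by how much, which is the kind of traceable explanation described above.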

Resilience Through Sensor Corruption

Perhaps most impressive was the system's ability to maintain performance for uncorrupted sensors even while one sensor was deliberately corrupted. Our metrics showed:

Temperature extraction accuracy dropped from 100% to 57% during corruption (as expected)

Humidity extraction accuracy remained at 98% despite temperature corruption

Pressure extraction accuracy stayed at 99% throughout the temperature corruption period

This resilience demonstrates a key advantage of HDC-based fusion: the ability to maintain reliable operation of the overall system even when individual components fail. For critical applications like autonomous systems, this graceful degradation rather than catastrophic failure is invaluable.
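One way to see why corrupting one sensor need not disturb the others is to work through a common HDC fusion scheme. The derivation below assumes bipolar hypervectors of dimension D, binding by element-wise multiplication, and bundling by majority vote over three sensors. The post does not state which scheme the demo uses, and the symbols R (role hypervector) and L (level hypervector) are introduced here purely for illustration.

```latex
% Assumed scheme: role vectors R_s and level vectors L_s are random in {-1,+1}^D,
% \odot is element-wise multiplication, and sgn is applied per component.
\begin{align*}
F &= \operatorname{sgn}\bigl(R_T \odot L_T + R_H \odot L_H + R_P \odot L_P\bigr) \\
R_H \odot F &= \operatorname{sgn}\bigl(L_H + N_T + N_P\bigr),
  \qquad N_T = R_H \odot R_T \odot L_T,\quad N_P = R_H \odot R_P \odot L_P \\
\Pr\bigl[(R_H \odot F)_i \neq (L_H)_i\bigr]
  &= \Pr\bigl[(N_T)_i = (N_P)_i = -(L_H)_i\bigr] = \tfrac{1}{4} \\
\mathbb{E}\!\left[\tfrac{1}{D}\,(R_H \odot F)\cdot L_H\right]
  &= \tfrac{3}{4} - \tfrac{1}{4} = \tfrac{1}{2}
\end{align*}
```

Under these assumptions the noise terms behave like random vectors regardless of what the temperature channel encodes, so the unbound humidity vector keeps a similarity of about 0.5 to its true level while unrelated levels score near 0. That is consistent with humidity and pressure accuracy holding steady while temperature is corrupted.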

Ultra-Low Power Operation

All of this sophisticated processing was accomplished with remarkably low power consumption:

Maximum power draw: 3.7 watts during processing

Idle power consumption: 2.4 watts

Net processing overhead: only 1.3 watts

For comparison, many embedded GPU solutions for sensor fusion require 10-50 watts. Against that range, the 3.7 W peak measured here is roughly 3 to 13 times lower, which opens up sophisticated sensor fusion to applications that cannot afford a GPU-class power budget.
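The arithmetic behind these figures is simple enough to check directly. The short sketch below recomputes the net overhead and the ratio to the quoted GPU range from the measurements above; the battery capacity is a hypothetical value added only to show what the difference means for runtime.

```python
PEAK_W = 3.7                  # maximum draw during processing (measured above)
IDLE_W = 2.4                  # idle draw (measured above)
GPU_RANGE_W = (10.0, 50.0)    # range quoted above for embedded GPU solutions

# Extra power attributable to the fusion workload itself.
net_overhead_w = round(PEAK_W - IDLE_W, 2)      # 1.3 W

# How many times more power the quoted GPU range draws than the Pi at peak.
ratio_low = GPU_RANGE_W[0] / PEAK_W             # about 2.7x
ratio_high = GPU_RANGE_W[1] / PEAK_W            # about 13.5x

# Hypothetical 37 Wh battery (for example, a 10 Ah pack at 3.7 V), ignoring conversion losses.
BATTERY_WH = 37.0
runtime_h = BATTERY_WH / PEAK_W                              # about 10 hours at peak draw
gpu_runtime_h = tuple(BATTERY_WH / w for w in GPU_RANGE_W)   # about 3.7 h down to 0.74 h

print(f"net overhead: {net_overhead_w} W")
print(f"GPU range draws {ratio_low:.1f}x to {ratio_high:.1f}x the peak power")
print(f"runtime on {BATTERY_WH} Wh: {runtime_h:.1f} h vs {gpu_runtime_h[1]:.2f}-{gpu_runtime_h[0]:.1f} h")
```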

Applications for Low SWaP Systems

This ultra-efficient sensor fusion capability is particularly valuable for systems with strict Size, Weight, and Power (SWaP) constraints:

Unmanned Aerial Vehicles (UAVs): Extended flight time through power savings

Remote environmental sensors: Longer battery life for deployment in hard-to-reach locations

Wearable health monitors: Sophisticated sensor fusion without draining batteries

Autonomous robots: Enhanced perception capabilities within tight power budgets

Space systems: Reliable sensor fusion with limited power availability

For these applications, the combination of reliability, explainability, and low power consumption addresses critical challenges that have limited deployment of advanced sensor fusion techniques.

Future Development

While today's demonstration shows impressive results, we're already working on several advancements:

Expanding sensor types: Incorporating accelerometers, gyroscopes, and other sensor modalities

Adaptive fusion weights: Dynamically adjusting the influence of each sensor based on confidence

Temporal fusion: Integrating historical data for trend-based anomaly detection

Distributed computation: Implementing HDC-based fusion across multiple low-power nodes

Hardware acceleration: Exploring specialized chips for even greater efficiency

Each of these directions promises to further enhance the capabilities of HDC-based sensor fusion while maintaining the core benefits of explainability and efficiency.

Conclusion

At Transparent AI, we believe that the next generation of intelligent systems must not only be powerful but also explainable and efficient. Our demonstration of HDC-based sensor fusion on a Raspberry Pi showcases the potential of this approach to deliver sophisticated capabilities within strict power constraints.

For applications ranging from autonomous vehicles to wearable health monitors, this technology enables new possibilities for reliable, interpretable sensor fusion. We're excited to continue advancing this technology and bringing these benefits to real-world systems.